Livespaces

Software Installation Manual

The software installation manual will provide a guide to installing the LiveSpaces software within a LiveSpace instance. There are two main types of software within a LiveSpace and the software installation manual will deal with both of these. The two types are:

- Integrated Services and Applications - Services and applications that are integrated via the LiveSpaces framework and operating environment. The deployment and installation process for these is fairly unified.

- Stand-Alone Applications - Applications that are stand-alone and not integrated via the LiveSpaces framework. The installation process for these applications will be unique to each application. In most cases these applications will gradually be moved across to become integrated services and applications.

Integrated Services and Applications

There are two different installers required for installing a Livespace instance:

- Server Installer (livespace-server-installer-X.X.exe) - installed on the main 'server' PC within the Livespace, taking note of the following:

- Select the Quicktime option if the PC will be used for playing any media items, for instance in Meta Applications.

- When asked for the 'Livespace configuration' enter the name of the configuration directory you want to store any customised configuration files for your particular Livespace (the name of the Livespace would probably be a good choice).

- When asked to select 'Additional Tasks' to be performed select the 'Install as Windows service' option if you want the Livespaces peer to run as a Windows service (i.e. if you want it to run whenever the PC is running). You should only select this option for PCs that are to be permanently situated within the Livespace.

- Make sure you modify the deploy/hosts.category_map and services/globals.properties files to reflect the correct settings of your Livespace. If you are using the Windows installer, it will have already done this for you, mapping the current host name as the server.

- Client Installer (livespace-client-installer-X.X.exe) - installed on all other PCs within the Livespace, taking note of the following:

- Select the Quicktime option if the PC will be used for playing any media items, for instance in Meta Applications.

- When asked for the URL of the Livespaces server (Livespace home URL) enter 'http://main_server:8090/livespaces' where main_server is the hostname for the main server PC within the Livespace (i.e. the PC you ran the server installer on).

- When asked for the 'Livespace configuration' enter the name of the configuration directory on the Livespace server where the customised configuration files are stored for your particular Livespace (as specified during the server installer).

- When asked to select 'Additional Tasks' to be performed select the 'Install as Windows service' option if you want the Livespaces peer to run as a Windows service (i.e. if you want it to run whenever the PC is running). You should only select this option for PCs that are to be permanently situated within the Livespace.

These two installers are basically identical as effectively they both install the Livespaces 'peer' on a PC, with the exception that the installer that we are calling the 'server' installer contains the bundles (jar files), and configuration files (e.g. the deploy and properties files), for all the available services and applications.

In a Livespace all these files/bundles are stored centrally on the 'server' and are retrieved when the Livespaces peer starts up on a particular PC. This means that a Livespace peer, as installed by either installer, is simply a bootstrap and container for the centrally stored services and applications.

These installers can be passed command line arguments to enable default options to be selected. This will aid in installing Livespaces on many machines as it will mean you won't have to repeatedly type in the server URL, configuration name etc. To find out all the command-line options simply run the installer from the command line with the /? or /HELP option. To specify values for any of the command line arguments that require it simply append the argument with '=' and then the value for the argument (e.g. /CONFIG=test).

The command-line arguments that apply specifically to Livespaces are:

- /CONFIG - the name of the configuration directory to use

- /SERVER_URL - (Client Installer only) The URL of the Livespaces server

Also of interest will be the command-line options:

- /MERGETASKS - Specifies the tasks that should be initially selected (these tasks will be merged with the set of default tasks)

- Available tasks:

- installservice - Installs Livespaces as a Windows service

- programicon - Creates icons in the Start > Programs menu


- /COMPONENTS - Overrides the default components that are to be installed

- Available components:

- client - (Client Installer only) The Livespaces client

- server - (Server Installer only) The Livespaces server

- quicktime - Quicktime 7, to allow media playback capability


- /SILENT - Runs the installer in silent mode so that the wizard and background window are not displayed but the installation progress window is. Note: when using silent mode you should use the command line arguments above to specify installer options.
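As an illustration of combining these options for an unattended client install, the Python sketch below assembles the installer's argument list. The helper name build_installer_command is hypothetical. The comma-separated form used for /MERGETASKS and /COMPONENTS values is an assumption based on the usual Inno Setup convention; this manual does not state it.

```python
def build_installer_command(installer, config, server_url=None,
                            tasks=(), components=(), silent=True):
    """Return the argument list for an unattended installer run."""
    args = [installer]
    if silent:
        args.append("/SILENT")
    args.append(f"/CONFIG={config}")
    if server_url is not None:
        # /SERVER_URL applies to the client installer only.
        args.append(f"/SERVER_URL={server_url}")
    if tasks:
        # Assumption: multiple values are separated by commas.
        args.append("/MERGETASKS=" + ",".join(tasks))
    if components:
        args.append("/COMPONENTS=" + ",".join(components))
    return args


command = build_installer_command(
    "livespace-client-installer-X.X.exe",
    config="test",
    server_url="http://main_server:8090/livespaces",
    tasks=["installservice"],
    components=["client", "quicktime"],
)
print(" ".join(command))
```

On a Windows PC the resulting list could be passed to subprocess.run to launch the installer. Check the installer's /? output first to confirm the exact option syntax.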

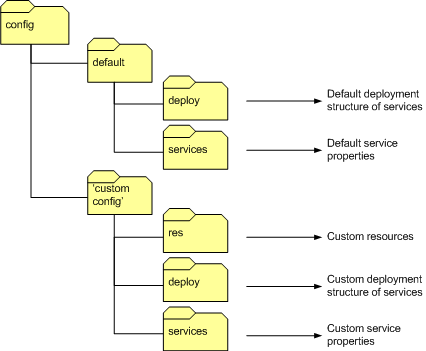

Following the installation you will need to set up the custom configuration files for your Livespace to specify where services will be run and the configuration properties of those services (see the Service Catalogue for a listing and description of all the available LiveSpace services). The configuration files reside on the Livespace server in the config sub-directory within the Livespaces Server installation directory. In the config directory you should see a directory named default and also a directory corresponding to the name of the configuration directory you specified in the installation process. The accompanying diagram shows the structure of these configuration directories.

The idea with these configuration directories is that any customisation you wish to make, in terms of where services are to be run or the properties of services, should be saved into the custom configuration directory so that the default configuration is left untouched (although it may be modified as part of an upgrade). That is, the default configuration directory should NOT be modified, and the configuration properties you wish to customise for your Livespace should be defined in the custom configuration directory. All configuration files defined in the custom configuration directory will be merged with those in the default directory, with properties in the custom configuration taking precedence over the defaults (i.e. customised properties override the defaults).

Service Allocation

The allocation of services (where services run) within a Livespace is configured through the set of files in the deploy sub-directory of a configuration directory. These files basically specify a map of what category each computer belongs to, and what services to run on each of the specified categories of computer. The three main files, or types of files, in this directory are:

- host.category_map - Specifies the mappings of host names to categories (i.e. defines what category each host name belongs to).

- services.deploy_map - Defines what deployment configurations (.deploy files) get run on each host or host category.

- .deploy files - Deployment configuration sets that specify what services will be run, their relative load order and run levels.

Host Category Map

The host category map file (host.category_map) is used to map host names to categories, which can be used to flexibly control what services and service configurations are deployed to a host. The default property specifies what category hosts should be mapped to by default if their host name does not appear in this file. To assign particular host names to categories use the host property appended with the actual host name of the computer. For example:

host.myhostname=display

specifies that the computer with host name 'myhostname' belongs to category 'display'.

Services Deploy Map

The services deployment map file (services.deploy_map) specifies which deployment configuration sets (.deploy files) should be loaded for any given host name or host category. This allows the composition of deployment configuration sets for particular categories or hosts. The default property specifies what deployment configuration set(s) should be loaded by default, that is if the host or category for a Livespace peer does not exist in the file. To assign particular deployment configuration sets to categories use the category property appended with the category name (see example below). To assign particular deployment configuration sets to hosts use the host property appended with the host name (see example below). To compose multiple deployment configuration sets use the '+' character (see example below).

category.client=client

category.server=client + server

category.display=client + display

host.somehostname=client + display
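The lookup that these two map files describe can be sketched in Python as follows. This is only an illustration, not the Livespaces implementation: the function names are hypothetical, the default=client lines are made-up values, and the rule that a host entry is consulted before a category entry is an assumption.

```python
def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def resolve_deploy_sets(hostname, category_map, deploy_map):
    """Return (category, list of .deploy set names) for a host name."""
    # Hosts not listed in the category map fall back to the default category.
    category = category_map.get(f"host.{hostname}", category_map.get("default"))
    # Assumption: a host entry is consulted first, then the category entry,
    # then the default.
    spec = (deploy_map.get(f"host.{hostname}")
            or deploy_map.get(f"category.{category}")
            or deploy_map.get("default", ""))
    return category, [name.strip() for name in spec.split("+") if name.strip()]


# The default values below are hypothetical; the other entries are the
# examples used in this manual.
category_map = parse_properties("""
default=client
host.myhostname=display
""")
deploy_map = parse_properties("""
default=client
category.client=client
category.server=client + server
category.display=client + display
host.somehostname=client + display
""")

print(resolve_deploy_sets("myhostname", category_map, deploy_map))
print(resolve_deploy_sets("somehostname", category_map, deploy_map))
```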

Deploy Files

The .deploy files specify what services (or more correctly bundles) will be run, their relative load order, and at what run levels.

The .deploy files within the default and custom configurations are different than all the other files within the configurations in that they are not merged. Custom .deploy files simply override the entire matching file in the default configuration. However, default .deploy files can be included in custom .deploy files with the use of the include command. The include command can also be used to include any other .deploy files (e.g. .deploy files from within the same configuration). To include .deploy files from other configurations (e.g. 'default') prepend the .deploy file you wish to include with the configuration name followed with a forward slash (e.g. to include the default client deployment configuration set use include default/client, or to include the 'core' .deploy file from the current configuration use include core).

The three commands install, start and uninstall are used to specify services/bundles that should be installed, started or uninstalled when the Livespaces peer is started.

- install - specifies that the bundle should be installed but NOT started.

- start - specifies that the bundle should be installed AND started.

- uninstall - specifies that the bundle should be uninstalled. This is useful for uninstalling a service/bundle that comes from a .deploy file that has been included into the current .deploy file.

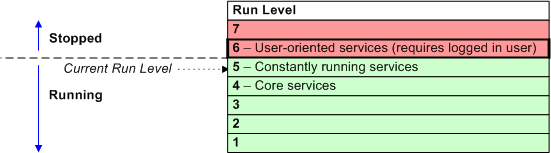

The OSGi standard allows for a set of run levels at which bundles (or services) can be specified to run. This means that the bundles running at any time on one system can be changed dynamically by changing the run level of the system (similar to runlevels in the Linux operating system). Bundles defined to run at or below the current run level will continue, or begin, running; those above the current level will be stopped, or remain stopped. In a LiveSpace we run core services at level 4, services that need to be run at all times (i.e. when the computer is logged in or logged out) at level 5, and services that run only when the computer is logged in at level 6.
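The run level rule can be illustrated with a short Python sketch. It assumes, for the sake of the example, that a peer sits at level 5 while the computer is logged out and at level 6 while it is logged in. The bundle name livespace.core is hypothetical.

```python
def running_bundles(bundle_levels, current_level):
    """Return the bundles whose run level is at or below current_level."""
    return sorted(name for name, level in bundle_levels.items()
                  if level <= current_level)


bundle_levels = {
    "livespace.core": 4,                # hypothetical core bundle
    "livespace.services.av.matrix": 5,  # runs whether logged in or out
    "livespace.ui.teamscope": 6,        # runs only when logged in
}

logged_out = running_bundles(bundle_levels, 5)
logged_in = running_bundles(bundle_levels, 6)
print(logged_out)
print(logged_in)
```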

To specify runlevels in the .deploy files use the initlevel command followed by the actual runlevel (integer). Any bundles that are declared to be started or installed following the initlevel declaration will apply at that runlevel, until another initlevel declaration occurs within the .deploy file. For instance, in the example below, the bundles livespace.comm-win32 and livespace.services.av.matrix have a runlevel of 5 and the bundle livespace.ui.teamscope has a runlevel of 6.

include default/client

uninstall livespace.services.media_viewer

initlevel 5

install livespace.comm-${platform}

start livespace.services.av.matrix

initlevel 6

install livespace.ui.teamscope
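To make the effect of the initlevel declarations concrete, the following Python sketch reads the example above and records the command and runlevel that apply to each bundle. It is a simplified reading of the format, not the Livespaces parser: included files are only listed rather than loaded, ${platform} is assumed to expand to the platform name (win32 here), and bundles declared before any initlevel are given no runlevel.

```python
DEPLOY_TEXT = """\
include default/client
uninstall livespace.services.media_viewer
initlevel 5
install livespace.comm-${platform}
start livespace.services.av.matrix
initlevel 6
install livespace.ui.teamscope
"""


def parse_deploy(text, platform="win32"):
    """Return (includes, actions); actions maps bundle -> (command, runlevel)."""
    includes = []
    actions = {}
    level = None  # no initlevel declaration seen yet
    for raw in text.splitlines():
        parts = raw.split()
        if len(parts) != 2:
            continue  # skip blank or malformed lines
        command, argument = parts
        argument = argument.replace("${platform}", platform)
        if command == "include":
            includes.append(argument)
        elif command == "initlevel":
            level = int(argument)
        elif command in ("install", "start"):
            actions[argument] = (command, level)
        elif command == "uninstall":
            actions[argument] = (command, None)
    return includes, actions


includes, actions = parse_deploy(DEPLOY_TEXT)
for bundle, (command, runlevel) in actions.items():
    print(bundle, command, runlevel)
```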

You should find that you won't need to change much in the deployment configuration other than the host.category_map, to define what categories your specific host names belong to. You may also want to define new categories, in which case you will need to create a services.deploy_map file and the .deploy files for the new categories.

Service Configuration

This section describes how to go about setting up properties of the services within the Livespace. You will probably need to change a few service properties due to the particular setup of your Livespace differing from the defaults although we have tried to make the service properties generic where possible to minimise the number of custom service properties required. The service properties are defined in the .properties files found in the services sub-directory of the custom configuration directory. The contents of the service property files are merged with the default properties, with custom properties taking precedence over (i.e. they override) default properties.

The globals.properties file (which will need to be modified) defines variables that apply across a number of services, and usually change from room to room. Properties defined in this file can be referenced within specific service property files using ${property}. When specifying the Livespace room name within this file, you should choose a name which is not likely to change. If you do need to change the room name after the Livespace has been operating for a period of time, please refer to the How to Change the Room Name Guide.
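The ${property} references can be pictured with the Python sketch below. The property name room.name and its value are hypothetical, and leaving an undefined reference untouched is an assumption; the manual does not say how Livespaces treats that case.

```python
import re


def expand_globals(value, global_props):
    """Replace each ${name} in value with the matching global property."""
    def lookup(match):
        # Assumption: references to undefined properties are left as-is.
        return global_props.get(match.group(1), match.group(0))
    return re.sub(r"\$\{([^}]+)\}", lookup, value)


# Hypothetical contents of globals.properties, already parsed.
global_props = {"room.name": "demo_room"}

expanded = expand_globals("rooms/${room.name}/media", global_props)
print(expanded)
```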

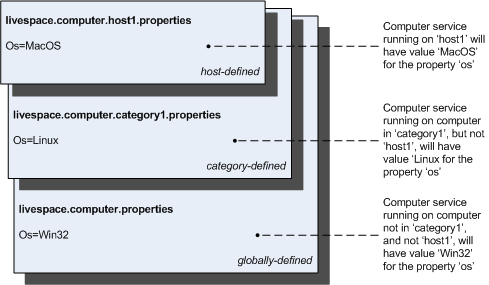

The actual service properties are defined using .properties files and can be defined at three different levels: global, category-specific and host-specific.

- Global Properties - Apply to the service no matter where it is run (i.e. they apply to the service running on any host).

- Category-Specific Properties - Apply to the service only when it is running on the specified category of hosts.

- Host-Specific Properties - Apply to the service only when it is running on the specified host.

The property files are merged in that order, so that more specific properties (e.g. host-specific) override less specific (e.g. global), as shown below.

The naming of the property files is important because it determines the level at which each property file applies. The .properties files are named as follows:

<service name>[-<category> | @<host>].properties

The service name is required and should be the full name of the service, as registered by the service itself. The category or host value is optional, and only one should be specified for a single file. Without specifying a category or host the file will apply globally, and if included the file will apply only for the specified category or host.

For example, in the listing of property files below the livespace.computer service properties file applies only to the service when it is running on host server1, the livespace.livepoint service properties file applies only to the service when it is running on hosts of category client, and the livespace.media service properties file applies to the service running anywhere.

livespace.computer@server1.properties

livespace.livepoint-client.properties

livespace.media.properties
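The naming convention and merge order can be sketched in Python as follows. This is an illustration under stated assumptions only: the function names, the property names (volume, output) and the two extra livespace.media files are hypothetical, and the sketch covers a single configuration directory rather than the merge between the default and custom configurations.

```python
def candidate_files(service, category, host):
    """File names that may apply to a service, least specific first."""
    return [
        f"{service}.properties",             # global
        f"{service}-{category}.properties",  # category-specific
        f"{service}@{host}.properties",      # host-specific
    ]


def merge_service_properties(service, category, host, files):
    """Merge parsed property files so that more specific values win.

    files maps a file name to a dict of its (already parsed) properties.
    """
    merged = {}
    for name in candidate_files(service, category, host):
        merged.update(files.get(name, {}))
    return merged


# Hypothetical file contents for illustration.
files = {
    "livespace.media.properties": {"volume": "50", "output": "speakers"},
    "livespace.media-display.properties": {"volume": "70"},
    "livespace.media@server1.properties": {"output": "projector"},
}

on_server1 = merge_service_properties("livespace.media", "display", "server1", files)
on_client = merge_service_properties("livespace.media", "client", "pc2", files)
print(on_server1)
print(on_client)
```

Building the candidate names from a known service, category and host avoids having to split a file name on '-', which would be ambiguous if a service name itself contained a hyphen.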

Building and Deploying from Source

Building and deploying the Livespace will require the setting up of a development environment (e.g. Eclipse) as described in the Developer Training Pack. If you want to build a Livespaces Windows installer you will need to install Inno Setup, which is available for download from the Inno Setup homepage. To enable remote deployment of the bundles and configuration directory etc. you will need to share the Livespaces installation directory where you installed the Livespaces Server. Make sure the shared directory has Write permissions for users that will be remotely deploying bundles to the share.

You should create a custom configuration sub-directory in the config directory of the livespace.osgi project. This directory should be named the same as the custom configuration directory on the Livespaces server. If you already have a custom configuration directory for your Livespace set up on the Livespace server, simply copy it into the config directory of the livespace.osgi project.

Within the root of your custom configuration directory you need to create two files (named as below) with the following properties defined within them:

build.properties

- livespace.shared_drive - The location of a general shared drive on the main server. This is where the Livespaces installers will be deployed to.

- livespace.server_directory - The directory on the server where Livespaces Server is installed.

- innosetup.dir - The path to the local directory where Inno Setup is installed. This is used for building the installer.

- tomcat.webapps - The shared webapps directory of Tomcat on the server (to host Ignite).

installer.properties

- jre.installer - The full path to the location of a JRE installer.

- quicktime.installer - The full path to the location of a Quicktime 7 installer.

You can use UNC paths for the directory locations, e.g. livespace.server_directory=//main_server/livespaces.

You may need to download a JRE installer from the Java J2SE download page and save this to the location you specified with the jre.installer property. Similarly for the Quicktime installer, which can be downloaded from the Quicktime download page.

Once you have completed the configuration of all the services (deploy, properties files etc.) the Livespaces services/bundles can be built and deployed. To do this run the all target of the Ant build script located in the livespace.osgi directory. This target will build and deploy all the Livespace services and the Ignite web application.

You will also need to run the deploy_win32_installer target of the same build file to build and deploy the Livespaces installers for Windows. The dist_client_zip target builds a zip file of all the files required to run Livespaces on a non-Windows computer.

Stand-Alone Applications

Sticker

To install Sticker simply download the relevant installer package from the Sticker homepage and run the installer.

Obsolete Components

Before Ignite was rewritten as a Dashboard applet, it was deployed as a web application in Tomcat. See the Ignite Web Application Installation page if you want to find out how it was installed.